From Playing The Piano to Algorithms You Can Trust

A few days ago, my piano teacher recommended a book to me: Play It Again: An Amateur Against The Impossible by Alan Rusbridger [commission earned], formerly editor-in-chief of The Guardian. I devoured it within three days.

Rusbridger recounts how over about 18 months, he learned playing Chopin’s Ballade No. 1 in G minor, Op. 23—all the while being pretty busy with managing how the Guardian handled affairs like Wikileaks and the News Corp. phone hacking scandal.

My piano teacher highlighted that it’s interesting to see how with mostly just 20 minutes of practice a day—or often less—Rusbridger managed to learn a very challenging piece of music. The important thing is the regular habit of practicing, not necessarily the number of hours you put in.

But you also need to use that time wisely. Pick out the things that are hard for you and work on them deliberately—or even just a single aspect of them. After a few minutes, move on to the next challenge, no matter how well it goes. Finally you also need to build your understanding of the whole piece, so regularly try playing from start to finish.

Here is a recording of Adam Gyorgy playing the ballade. I like this recording because you can really see the exhaustion at the end.

Rusbridger also had access to many interesting people, such as world-famous concert pianists and researchers in fields like the neurology of music and human memory.

The book is a great read—it’s entertaining and exciting, but also insightful. You learn a great deal about how people learn, how we understand music, and what music does to the brain (mostly good things).

But here I want to focus on one part that stood out for me: at one point, Rusbridger asks a researcher whether one could calculate the information contained in the ballade, and how much information that would be?

Measuring the Information in a Song

The researcher gives a solid (if simplified) answer. He says that “information” is the same as “surprise”—i.e., when you look at a note in a score, what is the probability of some note appearing next?

If the probability is high, the note doesn’t add much information. It’s not surprising. It doesn’t add much information. If the probability is low however, it adds novelty.

So summing up the probabilities of the notes appearing in the score could give you an approximation of the information contained within a song. The lower the sum, the higher the information content.

If you have, say, 36 different notes that can appear in a given score, that would give each note a probability of 1/36 of appearing as the next note.

But of course that’s not how music works. Depending on the key the piece is written in, certain notes are just more probable to appear than others. And those probabilities also depend on the cultural context the music was composed in.

In addition to the rules of harmony, every composer also has their own style. This also influences the probabilities.

Imitating Style

We could say, then, that every composer has their own pattern of how they surprise their audiences. And that pattern, this really, really long list of probabilities, we could call a composer’s style.

Let’s rephrase that:

For a wide range of input patterns, find a similarly wide range of output patterns.

This is a problem being worked on in the computer science sub-field of machine learning. By looking at lots and lots of data, algorithms figure out probabilities for certain symbols appearing after certain other symbols.

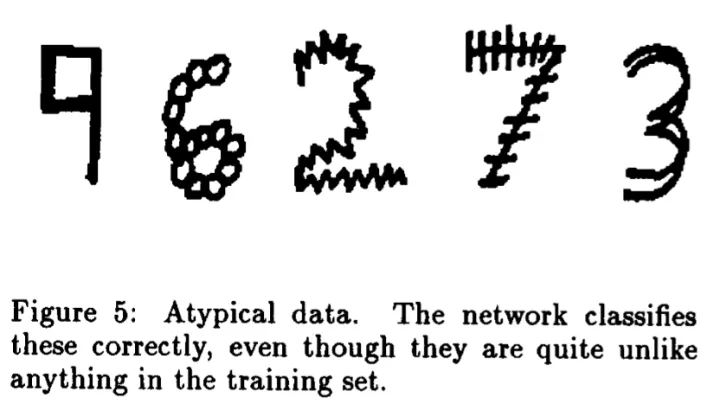

A common example is recognizing hand-written zip codes. To a machine, a photo of a number is not a number yet. A photo is just a very large list of dots of different colors—it doesn’t mean anything yet. And we can’t just give a computer a simple pattern of how every single number may look—hand-writing is much too messy for that.

To solve this, we are now feeding an algorithm lots of photos of numbers and tell it what kinds of numbers it is supposed to recognize on those photos. This data trains a neural network, a method of creating learning programs that uses neurons from biology and their connections as its central metaphor. For given input patterns, it calculates probabilities for the possible output patterns. The end result is a machine that can also “recognize” things it hasn’t seen before.

This works reasonably well for recognizing images. What about music? A composer’s style can also be learned by a neural network. Given an input pattern, the network—through firing different neurons at different weights—produces a certain output pattern that matches the probabilities inherent to the composer’s style.

We can now have a machine generate—”compose”—new music that uses, say, Mozart’s style [Dubnov et al., 2003].

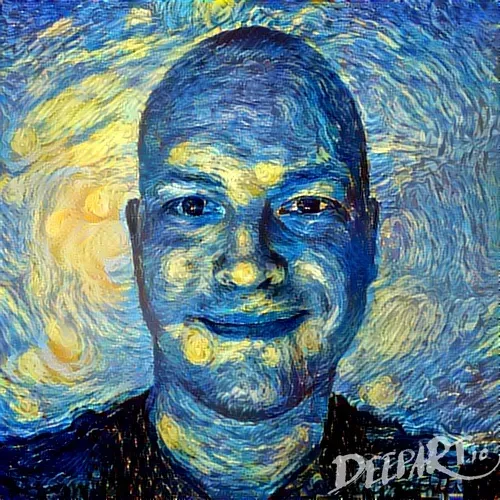

But data is just data, right? We can as well capture the style of an artist or of a single painting in a neural network [Gatys et al., 2015].

If we add another innovation—applying a learned style to existing data—we can devise an algorithm that takes an existing picture and re-draws it in the style of Van Gogh’s Starry Night.

Playing Games

I think this is incredible, but we’re not done yet. We can also encode, in a neural network, how to play a game.

Since games have rules that decide whether a player is winning or losing, we don’t even necessarily need lots of input data. The machine can just play against itself, over and over again, until it has figured out a way to beat the game. Watch a computer learn how to win at Super Mario:

Let’s take a step back here: playing music, painting a picture, playing a game—those are all patterns of behavior. We can teach a machine to behave in a certain way. Either to have it conform with a set of rules or to imitate an existing behavior.

What about flying a plane? That’s a behavior, too.

We wouldn’t know any exact rules that we could inspect for correctness, though. The behavior that enables the machine to fly a plane would be encoded in probabilities stored by a neural network that we couldn’t possibly try to understand.

Would you board that plane? What about a car? A train, maybe?

I believe that at some point, we will have to start trusting algorithms that we are unable to understand the details of. We understand how neural networks work, but why a trained network makes its decisions is beyond us in many cases.

People, Machines, etc.

Yet, we do the same with people. I don’t know how to fly a plane, I don’t even know what lessons exactly a pilot went through. And most importantly, I have almost no understanding of how that behavior called “flying a plane safely” is encoded in pilots’ brains.

Amazingly, I board that plane anyhow.

I have a certain amount of trust in pilots. There’s something called a pilot school, and they probably teach the right things there. Those things probably adhere to some standards that somebody wisely decided upon at some point.

I don’t have hard proof to trust the pilot. But I do, based on certifiations, cultural and social signals, and my current mood. It seems likely that the pilot has a high interest in landing the plane safely because they’re a mortal human just like me, and beings like us usually prefer not to die.

Maybe something similar needs to happen for machine learning and AI as well. At some point. Imagine we have self-driving cars and self-flying planes in a few decades down the road—and nobody dares using them because we don’t trust them.

Will we need certifications for neural networks as fit for driving a car or flying a plane? Will an algorithm have to get a driver’s license? Will we trust the creators of the algorithms to not game that testing system?

And even if they’d pass such a test—how would we make algorithms appeartrustworthy enough so people do board that plane or take that taxi ride?

Long-term, I don’t think we can choose whether we want to do it—just how.

Any ideas?